How maths will make drones and robots safer

By Tatiana Sedelnikov

Autonomous systems are impossible to test in every scenario. A set of mathematical techniques called formal methods can improve safety by identifying potentially risky behaviours, but a special skillset is needed to truly unleash their benefits.

Autonomous systems – from self-driving cars, to delivery drones, to agricultural robots – present a major business opportunity for all manner of industries.

These systems require software to control their trajectory, analyse their surroundings and make real-time, safety-critical decisions in complex environments. Such software can be mind-bendingly complicated, even to its makers. It is easy for bugs to be introduced, or for its designers to fail to build in response protocols for rare scenarios it might encounter.

Such software can also have potentially catastrophic consequences when it goes wrong. In 2018-19, two Boeing 737 Max 8 planes crashed within six months of each other, because a flaw in its autonomous systems led it to misread its surroundings, forcing the plane into a nosedive.

Fortunately, most software bugs and design oversights do not have tragic ends. But they are always a costly pain, resulting in product recalls, expensive investigations and rework, waste, and lost trust from customers.

How do we make safety-critical autonomous software safe?

So, what can we do to increase confidence in the safety of systems that rely on autonomous software?

A key component of any software development is of course testing, where we test the product in defined scenarios to check it does what we want. There are many types of tests that can be done, ranging from testing small sections of code to simulating the whole system and environment. This is important. But it is both laborious and limited since it’s impossible to test – or even imagine – every possible scenario.

‘Formal methods’, a type of approach to software development and analysis based on mathematical techniques, are a useful addition to the development process, complementing conventional testing to help identify – and therefore eliminate – many potential risky behaviours from autonomous software.

What are formal methods and why do they matter for autonomous software design?

Formal methods encompass a wide range of mathematical techniques and algorithms that can increase confidence in computerised systems.

The core idea is to precisely specify a system’s desired properties in formal mathematical terms – much as a structural engineer would use maths to define the forces and stresses affecting a building in its blueprints. The product can then be assessed against that design to check it has the safety properties we want.

As an example, consider a swarm of drones tasked with fighting wildfires. We can formally describe the communication protocol between the drones in mathematical terms. We can then use a theorem prover to show that the drones always agree on an action, such as when to drop water or when to retreat from a threat, even if messages between them get lost. That allows you to quickly detect whether they work as intended or perform outside of their expected behaviour.

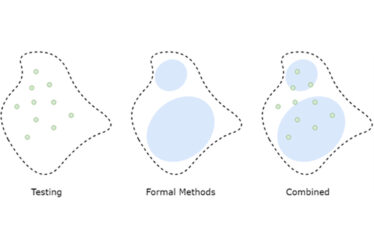

Formal methods have the advantage that you can define a whole class of inputs, rather than testing one at a time. For example, instead of having to test specific orders of messages between drones, where it might be impossible to exhaustively cover all orders, we could prove that any order of messages will lead to a successful agreement. When combined with conventional testing, this creates a much more rigorous testing space, which spots more problems and delivers a safer product (see diagram 1).

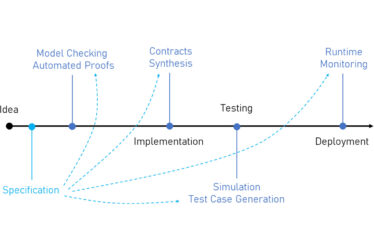

By defining requirements upfront, you can also spot problems early (see diagram 2), reducing redevelopment costs by rewriting code before it becomes embedded in an interconnected system. This already has a track record of success, for example AWS has successfully employed formal methods in the development of complex systems since 2011.

If formal methods improve safety, why aren’t they everywhere?

Formal methods are undoubtedly gaining a lot of interest for autonomous software, and there is a lot of exciting research in the area. However, adoption in industry has been slow.

The main challenge is dealing with the ‘reality gap’. Guarantees about software design are only as good as the initial specification, and it is hard to precisely specify real world interactions in mathematical terms. If you are specifying a system that only consists of software components, such as cryptographic systems for example, a complete model is feasible as you can describe all components mathematically.

But precisely defining software to control an autonomous drone that will roam forests encountering all manner of obstacles, weather, and light conditions, is much harder, as the real world is complex and impossible to perfectly specify. Just consider the infinitely many possible configurations trees can have in a forest, or the infinitely many things that could go wrong during the transmission of a message when a drone is damaged by fire.

Coming up with a good specification and applying appropriate tools to it for verification also requires a wide array of knowledge which isn’t in every software developer’s toolkit, another reason formal methods are taking time to filter through into software development.

But that doesn’t mean formal methods can’t be useful.

How can we try to overcome these challenges?

Even where a system cannot be precisely defined, we can still come up with abstractions – simplified mathematical descriptions that focus on the most relevant aspects of the system and the world around it. Using domain knowledge – obtained from years of experience in a field as well as testing – we can identify which aspects matter most for safety. We can also identify components of a system that are more suited to specification than others, allowing for at least partial verification.

Going back to the example of fire-fighting drones, looking at the messages between them only and ignoring the environment is one such example of isolating a system component to specify. If we do want to look at the environment, we can focus on critical aspects of it such as temperature changes to approximate distances to fire (instead of exact modelling of the fire spread).

We can also combine simulation and formal methods by using specifications to generate scenarios for the simulation, identifying scenarios that uncover gaps in the abstracted specification. This again shows that the way forward is to have a wide range of methods in your toolkit when it comes to improving safety.

Formal methods can improve safety

Autonomous systems are complex. By their nature, they deal in probabilities and uncertainties rather than ‘if-x-then-y’ logic. Formal methods may not catch everything, but combining conventional testing and formal methods will, in most cases, discover more bugs than if you used either of them separately, significantly reducing the probability of something going wrong post-launch. They are therefore a valuable tool to provide safety assurance and catch problems early in autonomous systems.

In the next blog on this topic, we will illustrate the use of probabilistic model checking as an example for a formal method useful for quantifying risks and uncertainties.