In the old days, inventors took risks. The Wright Brothers flew their own planes, fuelled by belief in their designs. Less successfully, parachuting pioneer Franz Reichelt tested his parachute suit design by jumping from the Eiffel Tower, only to plummet 57 metres to his death.

Such things do not happen today. Safety cases for new technologies are conducted with extreme rigour before lives are put on the line. Few fields demand more of that rigour than assisted and autonomous driving.

Safety in autonomy is not just about the vehicles, but a whole variety of subsystems – from incident detection and hazard alerts, to steering and braking systems, to smart bumpers and lights.

Anyone who can prove their system offers even a 1% safety improvement will find a receptive market in automotive. But testing safety systems is challenging, since it means proving your system reduces the chance of an already rare event (i.e., an accident).

Theoretically, you could do that by driving two cars – a control car and one fitted with your safety system – for many miles, and measuring differences in driving quality or accidents. But that would be impractical and unethical. We must prove new systems are safe without putting anyone in danger. The way to do that is simulation.

Simulators – the human challenge

Simulators are becoming closer to replicating real life. They can mimic the laws of physics – what will happen in a collision, say. But they struggle to mimic the unpredictable behaviour of humans.

Whilst completely self-driving cars may eventually become the norm, the next generation of autonomy will be more about assisting humans to drive more safely and efficiently. Simulations of such technologies must therefore include studying their effect on humans – it is no good having a driving assistant that lets the driver stop paying attention to the road if it needs that attention to return instantly in an emergency.

Building effective simulators to test safety systems on humans

A recent TTP project illustrates the challenges of developing reliable simulations for human factors.

We set out to explore driver reaction times. It is widely recognised that faster driver reactions reduce accidents. An often-quoted, though hard to prove, figure is that a 1.5-second reduction in reaction times could reduce accidents by 90%. We wanted to understand the effect of distraction on reaction times, so we could test safety systems that aim to improve them.

First, a cautionary tale. We started with an off-the-shelf driving simulator and a gaming steering wheel, controls and pedals. An experimenter noted driver attentiveness and reaction times, first while the driver was focussed on driving, and then while the driver was forced to complete an auxiliary task. The data was terrible. Drivers complained that the simulation was inaccurate, and the mental load created by dealing with its flaws made observations about distractions invalid.

We needed to ensure the driver’s mental load was representative of real-world driving, and we wanted more granular data. So we built our own simulation using Unreal Engine 4.

Simulation drivers drove around a virtual environment in a truck. Hazards, such as a roadblock, were presented in front of the vehicle at random, and the driver had to acknowledge each one by pressing a button. We also introduced an on-screen distraction that required the driver's attention – a task to select the missing one of the four buttons displayed in the top right corner – mimicking the distraction potential of a mobile phone.

This allowed us access to driver performance metrics in real time and gave us control over experimental conditions. With this, we were able to collect millisecond-accurate driver reaction time data in response to hazards on the road.
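One way to turn such an event log into reaction times is sketched below. It is a minimal illustration rather than the code used in the project: the function name `reaction_times_ms`, the 5-second timeout and the timestamps are all assumptions. Each hazard onset is paired with the first button press that follows it, and a hazard with no press inside its window is recorded as a miss.

```python
from bisect import bisect_left


def reaction_times_ms(hazard_ms, press_ms, timeout_ms=5000):
    """Pair each hazard onset with the first button press that follows it.

    Timestamps are integer milliseconds on a shared clock. Returns one entry
    per hazard: the reaction time in ms, or None if no press arrived before
    the window closed. The timeout value is an assumption for illustration.
    """
    hazards = sorted(hazard_ms)
    presses = sorted(press_ms)
    results = []
    for i, onset in enumerate(hazards):
        # The window closes at the timeout or at the next hazard onset,
        # whichever comes first, so a press is never credited twice.
        window_end = onset + timeout_ms
        if i + 1 < len(hazards):
            window_end = min(window_end, hazards[i + 1])
        # Index of the first press at or after the hazard onset.
        j = bisect_left(presses, onset)
        if j < len(presses) and presses[j] < window_end:
            results.append(presses[j] - onset)
        else:
            results.append(None)
    return results


# Hypothetical timestamps, not data from the study.
hazards = [1000, 9000, 20000]
presses = [500, 1650, 9900, 30000]
print(reaction_times_ms(hazards, presses))
```

Recording misses explicitly, rather than dropping them, keeps the slowest responses visible in later analysis.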

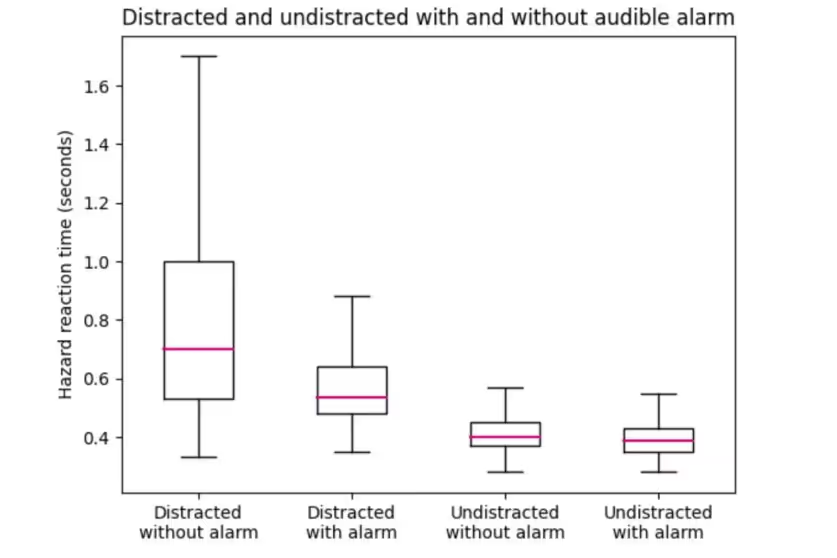

We gathered data on reaction times to unexpected hazards, with and without distraction. The data was striking – showing that distractions caused an increase of over one second in the 95th percentile reaction time. It’s these outliers that cause accidents.
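A tail comparison of this kind can be sketched in a few lines. The example below is illustrative only: it uses the simple nearest-rank definition of a percentile, which may differ from the method we used, and the reaction times are made-up numbers, not the study's data.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct% of all samples are at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def tail_shift(baseline, treatment, pct=95):
    """Difference between the pct-th percentiles of two conditions."""
    return percentile(treatment, pct) - percentile(baseline, pct)


# Hypothetical reaction times in seconds, NOT the study's data.
undistracted = [0.62, 0.70, 0.66, 0.81, 0.74, 0.69, 0.77, 0.72, 0.68, 0.90]
distracted = [0.71, 0.95, 0.80, 1.40, 0.88, 0.76, 2.10, 0.92, 0.84, 1.15]

print(f"p95 undistracted: {percentile(undistracted, 95):.2f} s")
print(f"p95 distracted:   {percentile(distracted, 95):.2f} s")
print(f"shift in p95:     {tail_shift(undistracted, distracted):.2f} s")
```

Looking at a high percentile rather than the mean keeps the focus on the slowest responses, which is where the risk sits.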

Given distractions happen frequently – from getting a phone alert to witnessing a commotion on the street – this relatively small-sounding effect could cause a lot of accidents when scaled up across the driving public.

Having shown that distraction does indeed slow reaction times, and so raises the risk of accidents, we could then use the simulation to test Advanced Driver Assistance Systems (ADAS).

The custom simulation environment let us incorporate software-in-the-loop driver assistance technologies. As a proof-of-concept, we modelled a hazard perception and driver alert system, which raises an alarm in the case of distractions such as mobile phone use or eyes not on the road (shown in Figure 1).

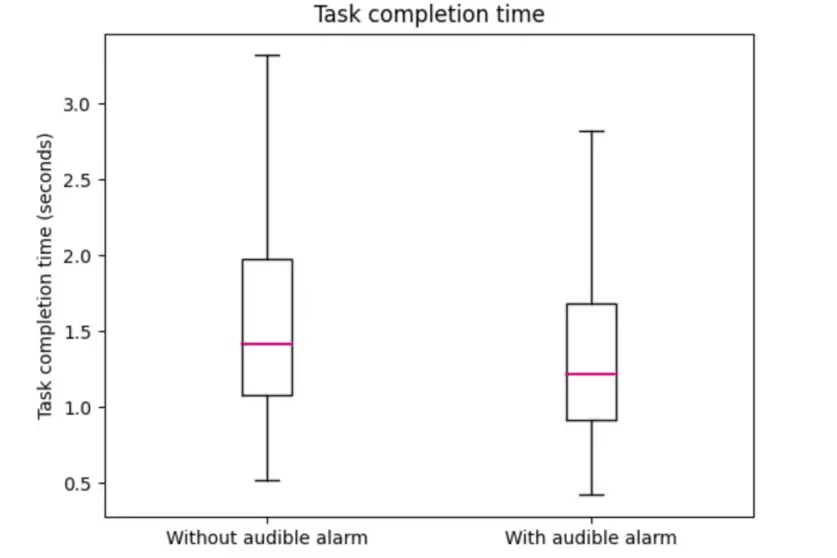

We measured driver reaction times with and without the system; distracted and undistracted. With driver alerts, we saw a reduction in undistracted driver reaction times, suggesting that the hazard perception system contributed to driver safety. More strikingly, we saw a reduction in the worst distracted reaction times, showing that a hazard perception system could mitigate some of the highest risks from driver distraction.
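The four-way comparison (distracted or not, alert system on or off) can be summarised per condition as sketched below. This is an assumed analysis layout, not our actual pipeline: the `summarise` function, the record format and every number in `trials` are hypothetical.

```python
from collections import defaultdict
from statistics import median


def summarise(trials):
    """Group (distracted, alert_on, reaction_s) records by condition and
    report the sample count, median and worst reaction time for each."""
    groups = defaultdict(list)
    for distracted, alert_on, reaction_s in trials:
        groups[(distracted, alert_on)].append(reaction_s)
    return {
        cond: {"n": len(rts), "median": median(rts), "worst": max(rts)}
        for cond, rts in groups.items()
    }


# Hypothetical trials in seconds, NOT the study's data.
trials = [
    (False, False, 0.70), (False, False, 0.80), (False, False, 0.75),
    (False, True, 0.65), (False, True, 0.70), (False, True, 0.60),
    (True, False, 0.90), (True, False, 2.10), (True, False, 1.20),
    (True, True, 0.85), (True, True, 1.30), (True, True, 1.00),
]

summary = summarise(trials)
for (distracted, alert_on), stats in sorted(summary.items()):
    print(
        f"distracted={distracted!s:5} alert={alert_on!s:5} "
        f"n={stats['n']} median={stats['median']:.2f}s worst={stats['worst']:.2f}s"
    )
```

With real data, each condition would need many more repeats across drivers before differences in the worst-case values could be treated as reliable.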

Conclusion – good simulators are key to credible safety cases

A simulation system like the one we built can be used to measure the impact of a new ADAS product on driver safety, without having to trial risky distracted driving behaviours on the road. It can help build safety data that could convince customers – such as vehicle makers or transport companies – of the product’s value in reducing accidents, and so justify a purchase.

This is one of many challenges around building reliable simulation for autonomous systems. Our point is that it is hard to build simulators that model real-world challenges. It’s important, therefore, to draw on experience, both to build the initial simulators, and to be able to recognise when they are not working and find solutions.

Want to learn more about our simulator and simulation capabilities?

We have demonstrated a new simulation environment that can be optimised for a variety of ADAS testing use cases. We wish to collect a larger number of repeats across more drivers and demographics, as well as to build other simulations for other use cases. We welcome partnerships with parties who would benefit from our approach, or would be interested in exploring new opportunities in this space.
